My fascination with compilers started when I built a programming language from scratch, along with a compiler for it. Back then I hadn’t considered that the same technology used for programming languages could have applications to big data. I now spend my days at Alvin helping develop a compiler that automatically extracts data lineage from our clients’ data systems. We believe our focus on the accuracy of the lineage we generate will unlock many new and exciting use cases for data driven companies!

This post introduces how this kind of technology can be used to build data lineage.

But before that, let’s start with some concepts.

What is a compiler for a programming language?

When you run a piece of code for a programming language it converts your code to another language that can be understood by your machine. This transformation is made by either a compiler or an interpreter.

Regardless of their difference, they both use a specification of the target language, check if the entered code is correct, and translate it to an intermediate code (like Bytecode in Java) or the machine code itself. A key part of a language specification is its grammar.

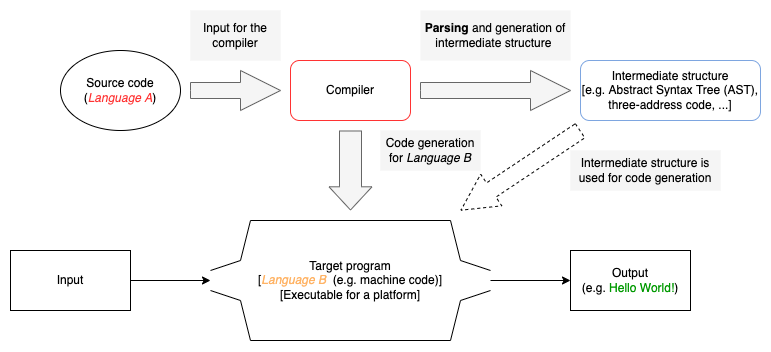

This image shows a simplified workflow of the compilation and execution processes:

- The source code is processed by the compiler that generates an intermediate structure;

- Having the intermediate structure, the compiler generates the target program;

- In this example, the target program is an executable software for a platform.

- The generated program can receive input and generate an output, like the famous “Hello World!” message in the terminal;

What is grammar in a compiler context?

A grammar defines a set of rules that determines if an input is a valid source code for some specific programming language. The notation for each rule is as follows:

rule_name -> rule_body

| alternative_rule_body_1

| alternative_rule_body_2;

For instance, if we have the following grammar containing only one rule with three possible productions:

start_rule -> “foo”

| “bar”

| “recursive abc ” start_rule

We can check if some input is according to this grammar’s rules. For example, to check if the input “recursive abc bar” is valid:

- Starting at start_rule, find where part of the input has a match;

- The first character sequence “recursive abc” is according to the grammar in the 3rd production, i.e. start_rule -> “recursive abc ” start_rule.

- Since it also expects a new call to start_rule, the rest of the input (“bar”) is according to the grammar in the 2nd production: start_rule -> “bar”.

- Therefore, the input “recursive abc bar” is valid for this grammar.

This analysis process is called parsing and it is performed by a software called parser, that uses a specific grammar to validate and perform additional actions, such as code generation. Also, instead of placing the strings directly in the grammar, it is common to separate them into another structure called lexer — the lexer elements can be defined using regular expressions and are called tokens.

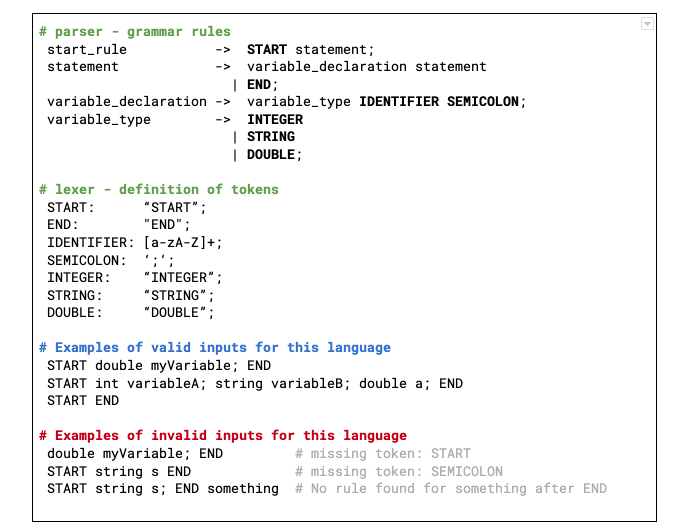

A simple grammar for a language in which you can only declare variables is shown below:

The start_rule indicates that every sentence in this language starts with the token START, which corresponds to the string “START”. Then it is followed by the rule statement that can be derived either in variable_declaration followed by statement or the token END.

Here we can see that a recursive relationship exists because the statement rule can call itself countless times. This is normal and expected for a grammar — the programming languages usually have a more complex grammar (check ANSI-C grammar). However, despite the recursions, it is possible to see that the computation for a valid input ends, in this example, when it finds the char sequence “END” — which corresponds to the token END — at the end of the input.

In this example, we are not generating machine code, but we could easily apply actions when deriving the rules, creating a structure that could be used to generate it. We have some tools to deal with specific grammars and generate machine code from valid inputs, such as:

- ANTLR (ANother Tool for Language Recognition) — According to the official website: “ANTLR is a powerful parser generator for reading, processing, executing, or translating structured text or binary files. It’s widely used to build languages, tools, and frameworks. From a grammar, ANTLR generates a parser that can build and walk parse trees”.

- Flex (Fast lexical analyzer generator) and Yacc (Yet Another Compiler-Compiler) — According to the Flex GitHub’s page, it is a tool for generating scanners (lexer): programs that recognize lexical patterns in text. It is used together with Yacc that receives the token stream from the lexer and, according to The Open Group Base Specifications Issue 7, 2018 edition, reads the grammar specification and additional C / C++ code to generate the parser.

An example of a project using Flex for the lexer, and Yacc for the parser is the Chameleon Programming Language I made with other colleagues when I was in university. In the file translate.y we apply actions to build what we call a Symbol Table (what is a Symbol Table?), mapping all the necessary information for the next steps of the compiler. We generated LLVM code and executed natively on our machines.

Using compiler technology to build data lineage!

You may be asking yourself “but what is data lineage?”

In short, according to Imperva: “Data lineage uncovers the life cycle of data — it aims to show the complete data flow, from start to finish. Data lineage is the process of understanding, recording, and visualizing data as it flows from data sources to consumption”.

In other words, data lineage plays an important role in the data space: it is capable of showing us the dependencies between data, making it possible to identify bugs, implement new features and make changes with more confidence. You can read more about data lineage in other posts from Alvin on Medium, for example The Future of Data Lineage — Beyond a Diagram.

But how can compiler technology be used to make data lineage? What do they have in common?

The answers to these questions are simple: every data manipulation needs to be interpreted in order to extract dependency information. For example, if we want to use SQL query to create a table t3 that depends on data present in other tables, let’s say t1 and t2, then this query needs to be parsed considering some SQL’s dialect grammar (we have a wide variety of dialects such as MySQL, Snowflake, and BigQuery).

Yes! MySQL, Snowflake, BigQuery, and any other platform have their own grammar and we can absolutely use the existing tools made for compilers to extract useful information from the queries to generate lineage.

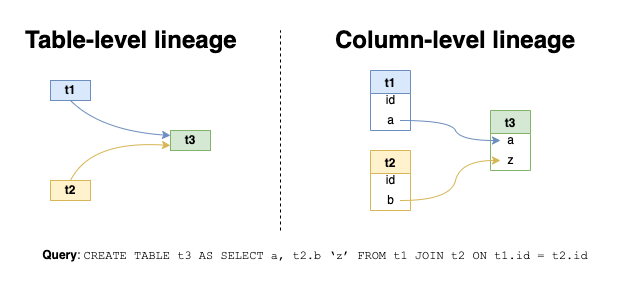

For instance, let’s take a look at this query:

CREATE TABLE t3 AS SELECT a, t2.b ‘z’ FROM t1 JOIN t2 ON t1.id = t2.id

And here it is it’s table and column level lineage:

It is possible to see that t3 is dependent on t1 and t2 tables — the columns a and z of t3 are generated using the values of a of t1 and b of t2, respectively.

But how does it work?

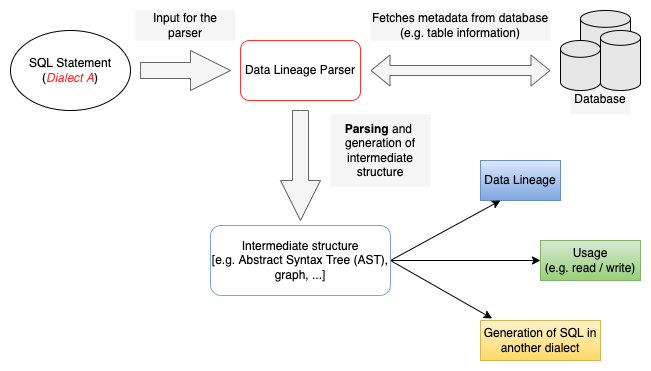

Here we can see a diagram representing the data lineage workflow that has similar characteristics to the compiler workflow we saw earlier. First, the parser receives a query written in some dialect and generates an intermediate structure that can be an Abstract Syntax Tree (AST), a graph, or any other representation that can be used to extract useful information.

Differently from the compiler that generates an intermediate structure to generate a target program, the data lineage parser generates an intermediate structure to extract information about lineage, usage, or even to generate SQL for another dialect, i.e. it does not generate an executable.

Another interesting part represented in this workflow is that the parser sometimes needs to fetch metadata from the database. It is needed because the parser, when dealing with the query, needs to check if the table and the columns exist, store this information that can be used during the parsing (table and/or column references) and after the parsing process, to generate lineage and usage information, for example. You can see in the example of column level lineage presented before that by simply looking at the query we can’t determine if column a is coming from t1 or t2. By fetching information from the database we can verify that only table t1 has a column named a.

What have we learned so far?

To generate lineage from a SQL query we need to parse, generate an intermediate structure, and extract information from it. Since some compiler tools can be used to parse code using a grammar for a specific programming language, and the SQL dialects have their own grammars, we can use the same tools to parse SQL queries as well. Also, sometimes the compiler tools are not enough for building our intermediate structure in the data lineage context, being necessary to fetch metadata from the database.

If you want to try it by yourself, you can grab any available grammar (suggestion: MySQL grammar (ANTLR4)) and use any compiler tool you prefer: ANTLR, Flex & YACC, or any other you want to use. You can apply actions, implement visitors or listeners, and put every useful information into a data structure that can be used to extract useful information like data lineage and usage.

Main References

- Compilers: Principles, Techniques, and Tools

https://suif.stanford.edu/dragonbook/

- What is Data Lineage?

https://www.imperva.com/learn/data-security/data-lineage/

- The Future of Data Lineage — Beyond a Diagram

https://medium.com/alvin-ai/the-future-of-data-lineage-beyond-a-diagram-2b7d3fec6037

- We need to talk about data governance (and introduce Alvin)

https://medium.com/alvin-ai/we-need-to-talk-about-data-governance-and-introduce-alvin-c6c915a3e152